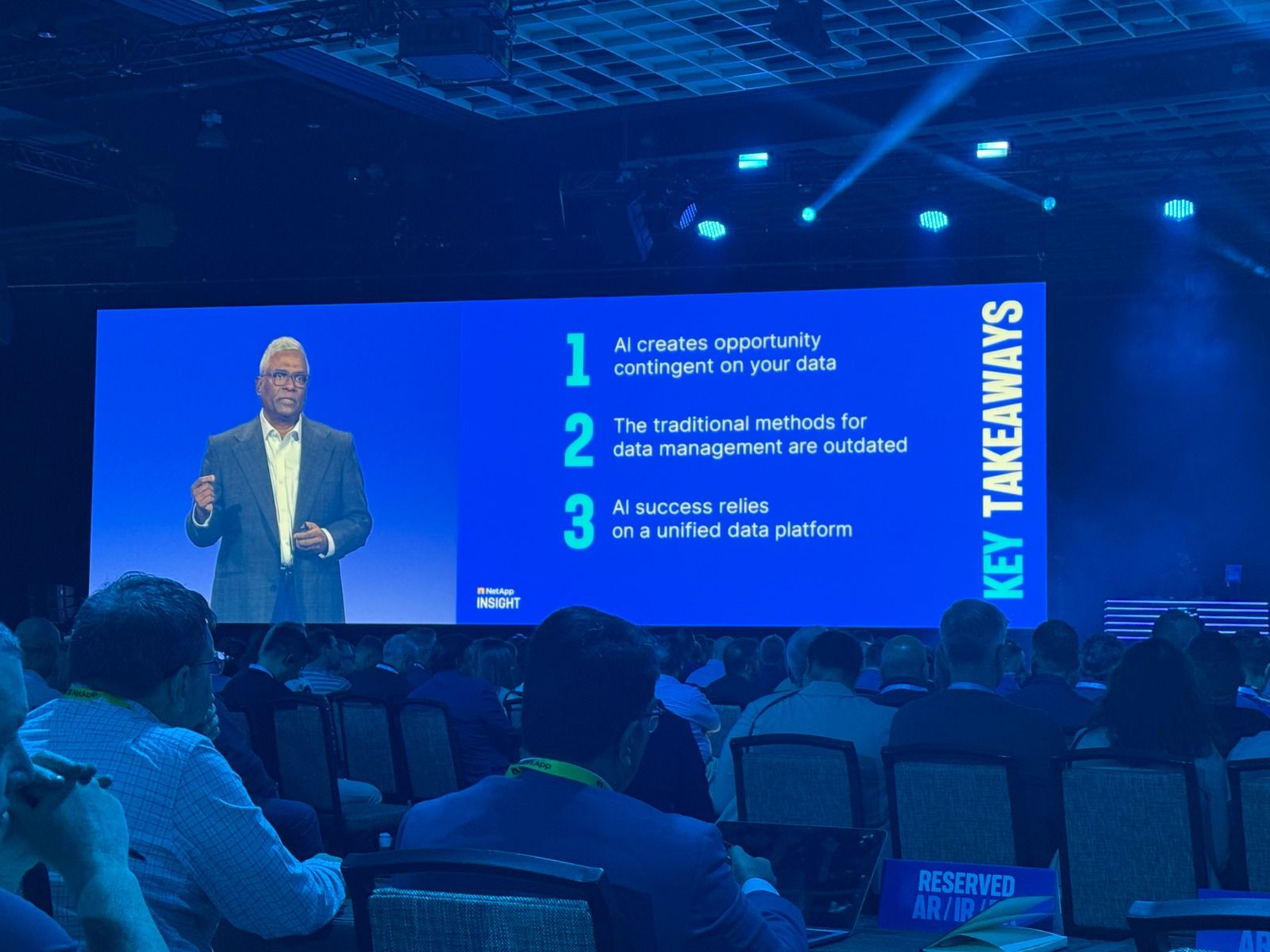

Most AI projects stall because the data is not ready, or because it cannot be moved and governed at scale.

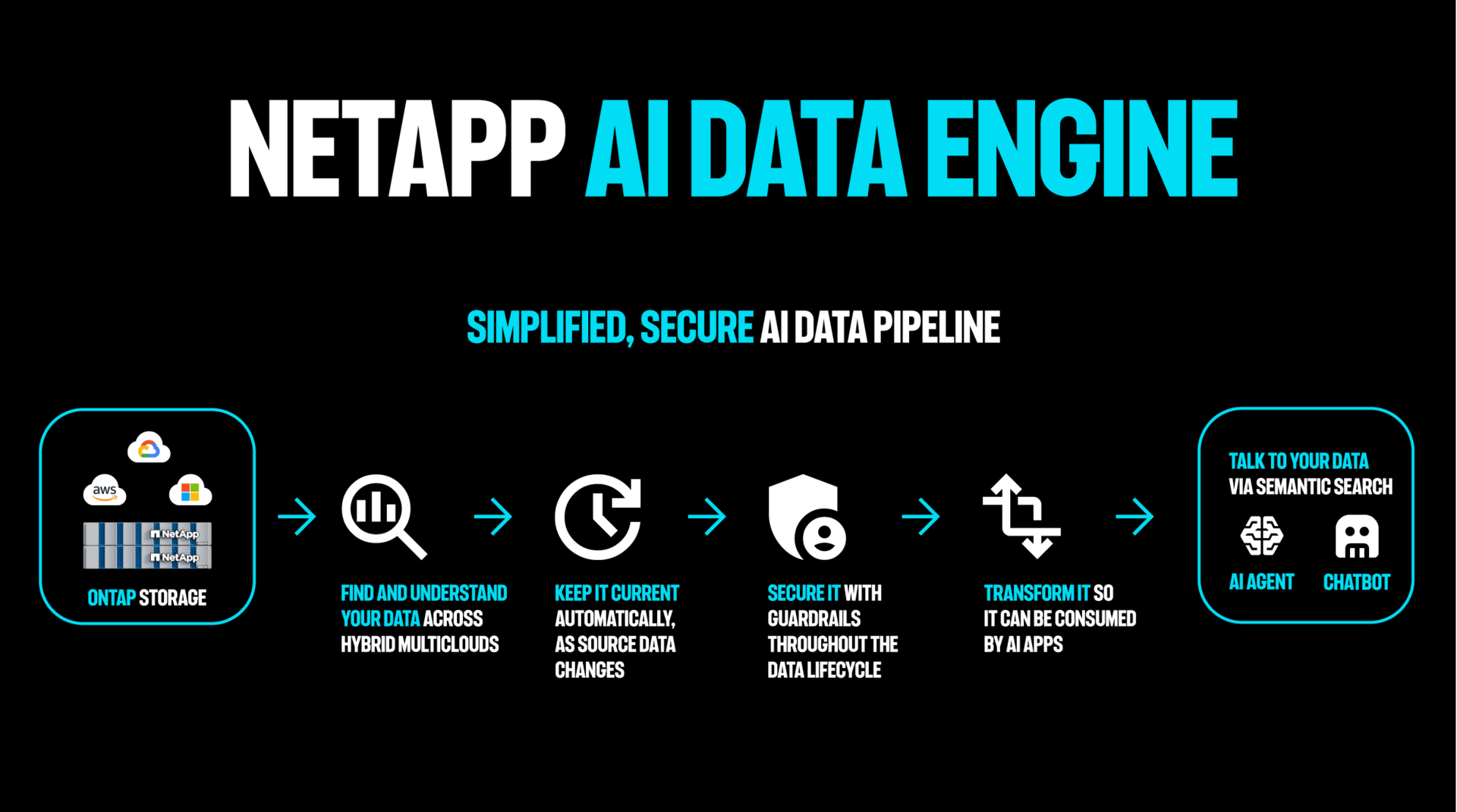

NetApp’s announcements at its Insight conference target that gap with a new AI-focused storage architecture and new data services built on ONTAP, the storage stack that already runs on-premises and in the three largest public clouds.

Here are the announcements:

NetApp AFX

Disaggregated storage architecture for unstructured and AI workloads, built on ONTAP.

Granular scale: grow performance (controllers) and capacity (enclosures) independently.

Designed to reach exabyte-class scale over time.

Same ONTAP APIs and data services that enterprises use today.

NetApp AI Data Engine

Metadata Engine: estate-wide visibility into large unstructured data sets, with that view kept current.

Data Guardrails: policy-driven protection for sensitive data.

Data Curator: discovery, transformation, and vector embeddings to make data searchable for AI.

Runs alongside AFX on GPU-assisted compute nodes and aligns with NVIDIA software components.

Multi-cloud and resilience

SnapMirror and FlexCache across Amazon FSx for NetApp ONTAP (FSxN), Azure NetApp Files (ANF), and Google Cloud NetApp Volumes (GCNV) to build a single global namespace without full data copies.

Native AI service access: ANF and GCNV are exposed to the Azure and Google Cloud AI stacks, so those services can use enterprise data directly.

NetApp Ransomware Resilience: breach detection and an automated, isolated recovery environment.

DR as a Service: expanded support for FSxN and Amazon EBS.

Modernization and control

Shift Toolkit: convert VMs between VMware, Hyper-V, and KVM formats in minutes, because the underlying data is not recopied.

NetApp Console: one place to operate storage and data services.

Trident updates for Kubernetes, plus scalability and encryption enhancements in ONTAP 9.18.1.

Why this matters

AI-ready data: You get findable, governed, and current data that AI systems can consume.

One operating model: The same ONTAP on-premises and in the cloud reduces re-platforming risk.

Performance without waste: Disaggregated scale means you add only what you need.

Security built in: Breach detection, ransomware defense, and guided clean recovery are first-party features.

Global data access: FlexCache and SnapMirror across clouds enable low-latency AI and analytics where teams work.
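The "performance without waste" point is easiest to see with a little arithmetic. Below is a minimal sketch comparing a coupled scale-out design (every node adds compute and capacity together) with a disaggregated one (controllers and enclosures sized separately). All unit sizes and the workload numbers are hypothetical, chosen only for illustration; they are not NetApp AFX specifications, and the function names are made up for this example.

```python
import math
from dataclasses import dataclass

# Hypothetical unit sizes for illustration only; NOT NetApp AFX specifications.
NODE_PERF_GBPS = 10.0          # coupled node: throughput it adds
NODE_CAPACITY_TB = 100.0       # coupled node: capacity it adds
CONTROLLER_PERF_GBPS = 10.0    # disaggregated controller: throughput only
ENCLOSURE_CAPACITY_TB = 100.0  # disaggregated enclosure: capacity only


@dataclass
class Requirement:
    perf_gbps: float    # throughput the workload needs
    capacity_tb: float  # usable capacity the workload needs


def coupled_plan(req: Requirement) -> dict:
    """Scale-out where every node bundles compute and capacity."""
    # Whichever need is larger dictates the node count; the other resource sits idle.
    nodes = max(math.ceil(req.perf_gbps / NODE_PERF_GBPS),
                math.ceil(req.capacity_tb / NODE_CAPACITY_TB))
    return {
        "nodes": nodes,
        "idle_perf_gbps": nodes * NODE_PERF_GBPS - req.perf_gbps,
        "idle_capacity_tb": nodes * NODE_CAPACITY_TB - req.capacity_tb,
    }


def disaggregated_plan(req: Requirement) -> dict:
    """Size controllers (performance) and enclosures (capacity) independently."""
    controllers = math.ceil(req.perf_gbps / CONTROLLER_PERF_GBPS)
    enclosures = math.ceil(req.capacity_tb / ENCLOSURE_CAPACITY_TB)
    return {
        "controllers": controllers,
        "enclosures": enclosures,
        "idle_perf_gbps": controllers * CONTROLLER_PERF_GBPS - req.perf_gbps,
        "idle_capacity_tb": enclosures * ENCLOSURE_CAPACITY_TB - req.capacity_tb,
    }


if __name__ == "__main__":
    # A GPU-heavy workload: high throughput, modest capacity.
    gpu_job = Requirement(perf_gbps=80, capacity_tb=200)
    print("coupled:      ", coupled_plan(gpu_job))
    print("disaggregated:", disaggregated_plan(gpu_job))
```

With these made-up numbers, the coupled design needs 8 nodes to reach 80 GB/s and strands 600 TB of capacity, while the disaggregated plan buys 8 controllers and only 2 enclosures. Real sizing depends on actual hardware specs and workload mix.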

My analysis

Enterprises do not want a parallel AI stack that ignores existing controls. They want AI on the platform they already trust. NetApp’s move is not another point product. It is ONTAP extended for AI with three valuable services: visibility (Metadata Engine), policy (Data Guardrails), and retrieval (Data Curator). The cloud updates are quiet but important.

A global namespace that spans AWS, Azure, and Google Cloud removes a common AI friction point: stale copies. The ransomware work also matters because successful AI programs will draw on sensitive data, and recovery must be clean and fast.

The bet here is pragmatic: keep the control plane and protection model stable, add AI-grade scale and data services, and meet teams where they already run workloads.

Who should care

CIOs and Heads of Platform building an AI foundation across hybrid and multi-cloud

Directors of Data/AI who need cataloging, guardrails, and embeddings without a new data island

Infra and SecOps teams who must prove resilience and recovery before AI expands

Fast facts

Runs on ONTAP with the same APIs and data services

Disaggregated scale for performance and capacity growth

Native ties into Azure and Google AI services

Ransomware detection and isolated recovery built in

Global namespace across AWS, Azure, and Google Cloud

What to watch next

Real-world AFX clusters attached to GPU farms.

Adoption of the AI Data Engine for enterprise RAG and agent use cases.

Broader partner reference architectures with Cisco FlexPod and cloud AI stacks.

Call to action

If you are planning an AI rollout and already use ONTAP on-prem or in the cloud, this is a direct path to production.

Best,

Ravit Jain

Founder & Host of The Ravit Show